I didn't write that much this year... here's why

It hurts a little to see that, counting this one, I only published three articles this year. It’s not that I didn’t have time to write. It’s that everything in tech, especially in AI, has been moving so fast that my thoughts seemed to change every week. What I thought I knew or had mastered felt outdated a few days later.

One of the main reasons I didn’t feel like writing was the constant sense of unease I’ve felt while watching the AI space evolve. And not because of the usual reasons people mention. Companies automating workflows and laying people off is sadly predictable during any technological leap. It’s not good, but it’s part of the cycle: we either learn new skills or we risk being replaced.

What truly concerns me is how easily LLM prompts are starting to replace actual critical and analytical thinking. Social media is full of teachers furious at students turning in fully AI-generated essays. There was even a case of a large consulting firm submitting a report filled with references to sources that didn’t exist, because no one bothered to verify what the model produced.

I’ve seen the same trend in software engineering. Colleagues, senior engineers who used to write excellent code, have turned into “TAB… TAB… TAB… ACCEPT” machines, relying on tools like Cursor or Claude Code and pushing generated code without auditing it. Is the code correct? Does it use that third-party API efficiently? If a major consultancy can submit an unchecked AI-generated report to a client, why wouldn’t developers push unchecked AI-generated code to production? In fact, I’ve already seen it happen at work. The amount of technical debt being introduced this way is alarming.

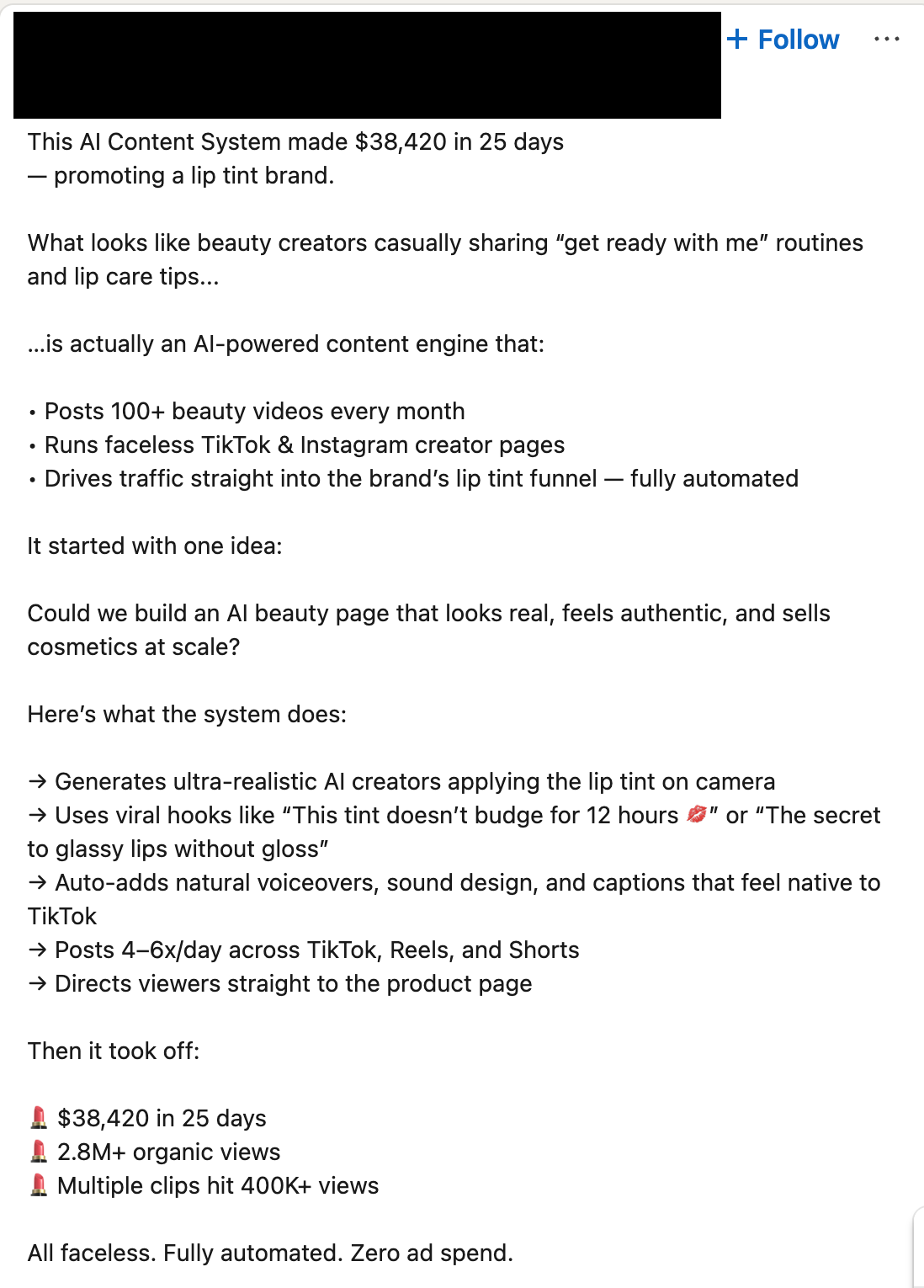

But the moment that bothered me the most this year didn’t come from bad code or a massive AI-induced outage. It came from the way AI-generated media is being used. A post popped up on my LinkedIn feed about a beauty brand promoting makeup and skincare products using AI-generated influencers.

Tomorrow it could be pharmaceuticals or fintechs. I am sure one day we will hear: “I’m taking these vitamins because an AI influencer told me they work wonders for its liver.” These influencers will say whatever the company wants, because they aren’t real, and hundreds of them can be generated instantly. Is that an ethical use of AI? I don’t think so. And I’ve seen people do similar things for other products. Fake AI-generated product reviews are everywhere now. That, too, feels wrong.

The irony is that I actually like AI. I love building AI tools. Coding with AI has genuinely made me more productive. But watching how people and companies misuse it has made me hesitant to talk about it at all. It made me retreat into my shell.

So this year, instead of writing, I focused more on learning for myself, reevaluating career decisions, reclaiming some family time I had lost due to travel, and building a few things with the new tools I’ve been exploring. I also spent time organizing content I’ve wanted to publish for a long while. If all goes well, I’ll start sharing it next year. Hopefully someone will find it useful.

I hope to write more in 2026.